Newswise: The tension between what applications demand from the network and the bandwidth actually available has been an ongoing battle of the Information Age, but a truce may now be within reach, according to new research from NJIT Associate Professor Jacob Chakareski.

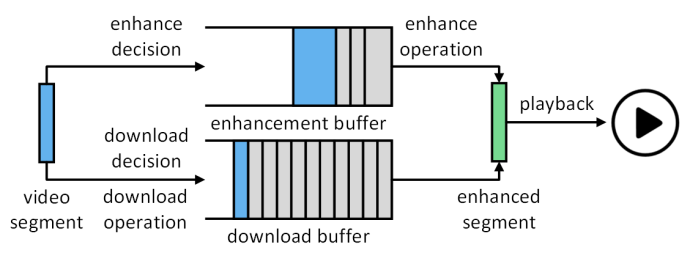

Chakareski and his team, collaborating with peers from the University of Massachusetts Amherst, devised a system in which the client deliberately requests lower-quality video and then makes up the difference by upscaling it with a neural network running on the receiving device.

They call it BONES (Buffer Occupancy-based Neural-Enhanced Streaming). The work will be presented this summer at the ACM SIGMETRICS conference in Venice, Italy, where only about 10% of submitted papers are accepted.

“Accessing high-quality video content can be challenging due to insufficient and unstable network bandwidth … neural enhancement [techniques] have shown promising results in improving the quality of degraded videos through deep learning,” the researchers wrote. BONES relies on Lyapunov optimization, a mathematical technique for keeping a queue (here, the client's playback buffer) stable while maximizing a performance objective. “Our comprehensive experimental results indicate that BONES increases quality-of-experience by 4% to 13% over state-of-the-art algorithms, demonstrating its potential to enhance the video streaming experience for users,” they reported.

“People have thought about this before. But this is the first work where this is mathematically characterized and made sure that it fits within the latency constraints. People have talked of this idea of super-resolving data,” Chakareski elaborated. “The client carries out rate scheduling and computation scheduling decisions together. It is key to the approach. This has not been done before.”
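To make the idea of joint rate and computation scheduling more concrete, here is a minimal sketch of a generic Lyapunov "drift-plus-penalty" decision rule. It is not the BONES algorithm from the paper; it only illustrates the general technique under stated assumptions. The `Option` fields, quality scores, the target buffer level, the weight `v`, and the assumption that download and enhancement happen one after the other are all hypothetical. For each segment, the client picks a bitrate and an enhancement level together, trading quality against how much the choice slows the filling of its playback buffer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One hypothetical (bitrate, enhancement) pair the client could choose."""
    bitrate_kbps: float   # encoding bitrate requested from the server
    enhance_level: int    # 0 = no neural enhancement (hypothetical scale)
    quality: float        # hypothetical quality score after enhancement
    compute_s: float      # hypothetical on-device enhancement time per segment


def choose_option(options, buffer_s, target_buffer_s, bandwidth_kbps,
                  segment_s, v):
    """Pick the option maximizing v * quality - deficit * busy_time.

    The virtual queue is the buffer deficit (target minus current buffer).
    Drift-plus-penalty minimizes deficit * (busy_s - segment_s) - v * quality;
    the deficit * segment_s term is the same for every option, so it drops out.
    """
    deficit = max(target_buffer_s - buffer_s, 0.0)
    best, best_score = None, float("-inf")
    for opt in options:
        # Assumed latency constraint: enhancement must keep pace with playback.
        if opt.compute_s > segment_s:
            continue
        download_s = opt.bitrate_kbps * segment_s / bandwidth_kbps
        busy_s = download_s + opt.compute_s  # assumes sequential download + enhance
        score = v * opt.quality - deficit * busy_s
        if score > best_score:
            best, best_score = opt, score
    return best
```

With a full buffer the deficit is zero and the rule simply picks the highest-quality feasible option; as the buffer drains, time-consuming options are penalized more heavily, so a low-bitrate download plus local enhancement can win over a high-bitrate download. The weight `v` sets how strongly quality is favored over buffer safety.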

“We have a prototype that we built, so the results that are shown in the paper are based on the prototype. And it runs really well. The results are equally as good as those that we were able to observe through simulations,” he said. The team has also made its code and data publicly available.

A proof-of-concept application is in the works. The BONES team is working with researchers at the University of Illinois Urbana-Champaign on a mixed-reality project called MiVirtualSeat: Semantics-Aware Content Distribution for Immersive Meeting Environments, which faces the same network challenges that BONES addresses.

Chakareski said he’s hopeful that popular video conferencing services may also adopt the method. “I think there will be a push for that, because neural computation is becoming something. You hear a lot about machine learning in different domains and this could be one more application where it could be used. We haven't thought about commercializing the technology, but this is certainly something that one could pursue, and we may pursue.”

“There is this continuous race between the quality of the content and the capabilities of the network,” he said. “As long as they both go side-by-side, this will always be an issue.”