Newswise — UPTON, N.Y. — In February, the U.S. Department of Energy (DOE), in cooperation with OpenAI, an American artificial intelligence research company, launched the first-of-its-kind 1,000 Scientist AI Jam Session. As one of nine host sites throughout the DOE national laboratory complex, DOE’s Brookhaven National Laboratory saw more than 120 scientists challenge and evaluate the capabilities of OpenAI’s latest step-based logical reasoning AI model built for complex problem solving. Overall, more than 1,600 scientists across the DOE complex participated in the event. Such partnerships between the DOE labs and industry are increasingly important for maintaining the nation’s technological edge, especially in the globally competitive AI research area.

“One of our country’s greatest assets – and an envy of the world – is the Department of Energy’s network of national laboratories, which for decades have driven breakthroughs in science and technology, strengthened national security, and fueled American prosperity,” said U.S. Secretary of Energy Chris Wright who attended the event at DOE’s Oak Ridge National Laboratory. “Like the Manhattan Project, which brought together the world’s best scientists and engineers for a patriotic effort that changed the world, AI development is a race that the United States must win. Today’s collaboration of America’s national labs and technology companies is an important step in our efforts to secure America’s future.”

Experimenting to enhance experiments

From Brookhaven’s training room, researchers representing the Lab’s many mission-critical research areas interacted with OpenAI’s most advanced generative pre-trained Transformer, or GPT, models, including the o3-mini-high and o1 pro, to ascertain their viability as useful “research assistants.” OpenAI’s models, such as the popularly used ChatGPT, are large language models (LLMs) trained on massive datasets to understand human language nuance. This enables them to be responsive on a range of subjects, generating text or other content depending on user prompts. The “o” series models used for the AI Jam essentially were “stress tested” on its multi-step problem solving and code generation abilities, especially when applied to diverse science.

“Products like ChatGPT, general-purpose LLMs as a service, have significantly changed the perception of and access to state-of-the-art AI methods for people who are not AI experts,” said , the AI theory and security group lead at CDS, who participated in the AI Jam. “They have also lowered the barrier to entry of applying AI to scientific problems, which is part of what the AI Jam Session was about. Several domain scientists at Brookhaven Lab and elsewhere have already been applying these tools in their work, but the Jam Session offered a guided approach to doing so with the latest models, particularly the latest simulated reasoning models, which are expected to be more useful for scientists.”

Scientists from the (NSLS-II), a DOE Office of Science user facility at Brookhaven Lab, used the AI Jam Session to explore how the models could help solve a variety of complex problems, especially in ways that would save time, optimize data analysis, and explore detailed simulations. Their projects spanned biological sample imaging, spectroscopy data analysis, workflows to facilitate multimodal research, experiment automation, and even calculations for potential accelerator upgrade designs.

, lead beamline scientist on the (PDF) beamline at NSLS-II, used Open AI’s models to build Python functions that can interpolate and extrapolate diffraction data at various sample-to-detector distances on a beamline using measurement data from a few known distances. The PDF beamline can adjust the sample-to-detector distance from 200 millimeters to 3,500 millimeters with high precision. Shorter distances capture a broader angular range with compressed peaks, offering a dense overview of many features at once. In contrast, longer distances yield a higher resolution with more spread-out peaks, providing a sharper and more detailed view within a narrower angular range.

“My ultimate goal was to combine the best of both worlds by simulating a diffraction pattern that spans a large angular range with high angular resolution leveraging a few measured data sets,” explained Abeykoon. “I developed an early-stage script, with assistance from OpenAI, that interpolates and extrapolates diffraction data for a chosen sample-to-detector distance, using data from known distances. While the initial results are promising, the script still requires further refinement, testing, and validation using both synthetic and real data ensuring that all realistic data acquisition conditions are adequately addressed. This enhancement remains one of my primary goals moving forward.”

, a scientist in the NSLS-II Accelerator Division’s Insertion Devices group, used his time at the Jam to challenge these models in applications related to brightness optimization at the light source.

“My first impression was that these AI models were very capable of writing code to create useful tools,” recalled Hidas. “After getting a feel for those features, I started to test logic and reason. I’ve been asking the models to do related math problems that get incrementally harder. I’ve found that the results varied a bit across models. They were capable of doing some tasks for me that could save a lot of time, but there is still some work to be done before it can tackle some of the more difficult problems we’re working on.”

Hidas was not alone in finding that the “o” series models could not easily navigate the truly complex science that Lab researchers confront every day in their work – at least not without a little human touch. , a senior researcher with the Systems, Architectures, and Emerging Technologies department at CDS, examined code development for new physical processes in the open-source physics simulation package, G4CMP. The code simulates the transport of phonons and electron/hole pairs in silicon and other materials.

“My goal was to attempt a simple encoding of quasiparticle dynamics as a test of ChatGPT’s capabilities,” Baity explained. “The problem was carefully selected to be a difficult but still benchmarkable task. The model was quick to generate the Geant4 particle definition code files, although it made an initial error in defining the spin of the particle. However, on query, the model was unable to properly determine which files should use the new particle definitions. Eventually, I was able to point it toward the specific relevant code files for writing phonon-quasiparticle interactions. After a fair bit of troubleshooting errors that took essentially the rest of the day, I managed to generate a code to model the phonon-quasiparticle interactions. The AI model is useful for providing bare-bones codes for highly specific tasks from scratch or troubleshooting minor errors, but, for moderately complex codes or tasks, it likely takes as long to prompt engineer the model.”

, a research associate also with CDS Systems, Architectures, and Emerging Technologies, took the opportunity to ask the model to deliver insights and new solutions to an existing computing performance problem without providing any data.

“I didn’t accomplish what I set to do. However, for information collecting and summarization capabilities, the model did super well,” Hsu said. “Prompt engineering still plays the critical role toward getting satisfactory or expected responses. I feel asking AI tools to do well-defined tasks in small scope is really helpful and saves time.”

Hsu noted he would like to see more of these types of collaborations between industry and the national labs that assess how AI can accelerate scientific discovery.

“I would love to participate in another one, and I want to see if I can twist my prompting strategy as well as having more experience on various tools,” he added.

, an assistant computational scientist with the Trustworthy AI Group at CDS, echoed that sentiment.

“If I participate in another AI Jam, I might change the topic from physics questions to coding questions and get more experience using the LLM to help or improve my coding,” she said.

She worked on simulating the X-ray structure factor of water to see if incorporating electron density information from Wannier centers could increase the agreement between simulation and experiment. Cao found it was “partially workable.”

“The LLM can generate X-ray atomic form factors, which agree with the values in databases,” she added. “The LLM also can explain the general workflow of calculating structure factors of water. However, as there are not many previous studies about incorporating electron density information, the LLM touched this topic but did not really solve it. In the end, we did not successfully solve the problem. But that’s not too disappointing or surprising because it is a hard problem that has not been solved before. We were happy that the LLM gives reasonable answers related to questions about X-ray structure factors and atomic form factors.”

Meanwhile, scientists at the Laboratory for BioMolecular Structure (LBMS) used the Jam Session to investigate how AI could enhance the research done at their cryo-EM facility, especially for improving the imaging of biological samples with nanometer resolution.

“The AI models we had access to included helpful features that aren’t available in the free versions,” said , scientific operations director of LBMS. “We were impressed at how the simulated reasoning models began to provide information that we didn’t initially consider as we were working. There were some publications we asked about that they didn’t have information on, so ensuring that these models have current research specific to various scientific fields could be a way to improve AI systems for scientists.”

In addition to CDS, NSLS-II, and LBMS, Brookhaven researchers from the Center for Functional Nanomaterials (CFN), Condensed Matter Physics and Materials Science Department, Environmental Science and Technologies Department, Collider-Accelerator Department, Instrumentation Department, and the Electron-Ion Collider participated in the day-long event.

Where do scientists go from here?

While the 1,000 Scientist AI Jam Session offered the first hands-on pass at determining how GPT models can impact the pace and quality of scientific discovery, the next steps will include a lab-level review and aggregation among all nine participating DOE national labs: Brookhaven, Argonne National Laboratory, Lawrence Berkeley National Laboratory, Idaho National Laboratory, Lawrence Livermore National Laboratory, Los Alamos National Laboratory, Oak Ridge National Laboratory, Pacific Northwest National Laboratory, and Princeton Plasma Physics Laboratory. Resulting feedback will be shared with developers like OpenAI and Anthropic, both based in San Francisco, Calif. Anthropic offered several labs access to its Claude 3.7 Sonnet model during the AI Jam to create AI systems specifically for scientific needs.

Locally, the results and conversations that came out of this collaborative event have already started to make an impact on individual facilities within the Lab. For instance, a few of the teams from NSLS-II have started planning a project to develop a new powder diffraction simulation code based on their experience at the AI Jam.

“What I personally got out of the event was greater visibility into the types of problems Brookhaven Lab scientists are interested in applying AI to and some early indications of the state of these efforts,” Soto said. “These are early steps. As models improve and scientists become more proficient at framing their questions and tasks in ways that the models can successfully tackle, I anticipate many opportunities for making real and significant advances in research.”

Brookhaven National Laboratory is supported by the Office of Science of the U.S. Department of Energy. The Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit .

Follow @BrookhavenLab on social media. Find us on , , , and .

MEDIA CONTACT

Register for reporter access to contact detailsArticle Multimedia

Credit: Kevin Coughlin/Brookhaven National Laboratory

Caption: Paul Baity, from Brookhaven Lab's Computing and Data Sciences Directorate, previously used ChatGPT for small-scale Python code generation, noting "the model is good at this task with the caveat that it sometimes makes mistakes." He spent the AI Jam working on code development for quasiparticle dynamics processes.

Credit: Kevin Coughlin/Brookhaven National Laboratory

Caption: Chuntian Cao (left), from Brookhaven Lab's Computing and Data Sciences Directorate, worked with Deyu Lu (right), from the Center for Functional Nanomaterials, on simulations related to the X-ray structure factor of water that included electron density information.

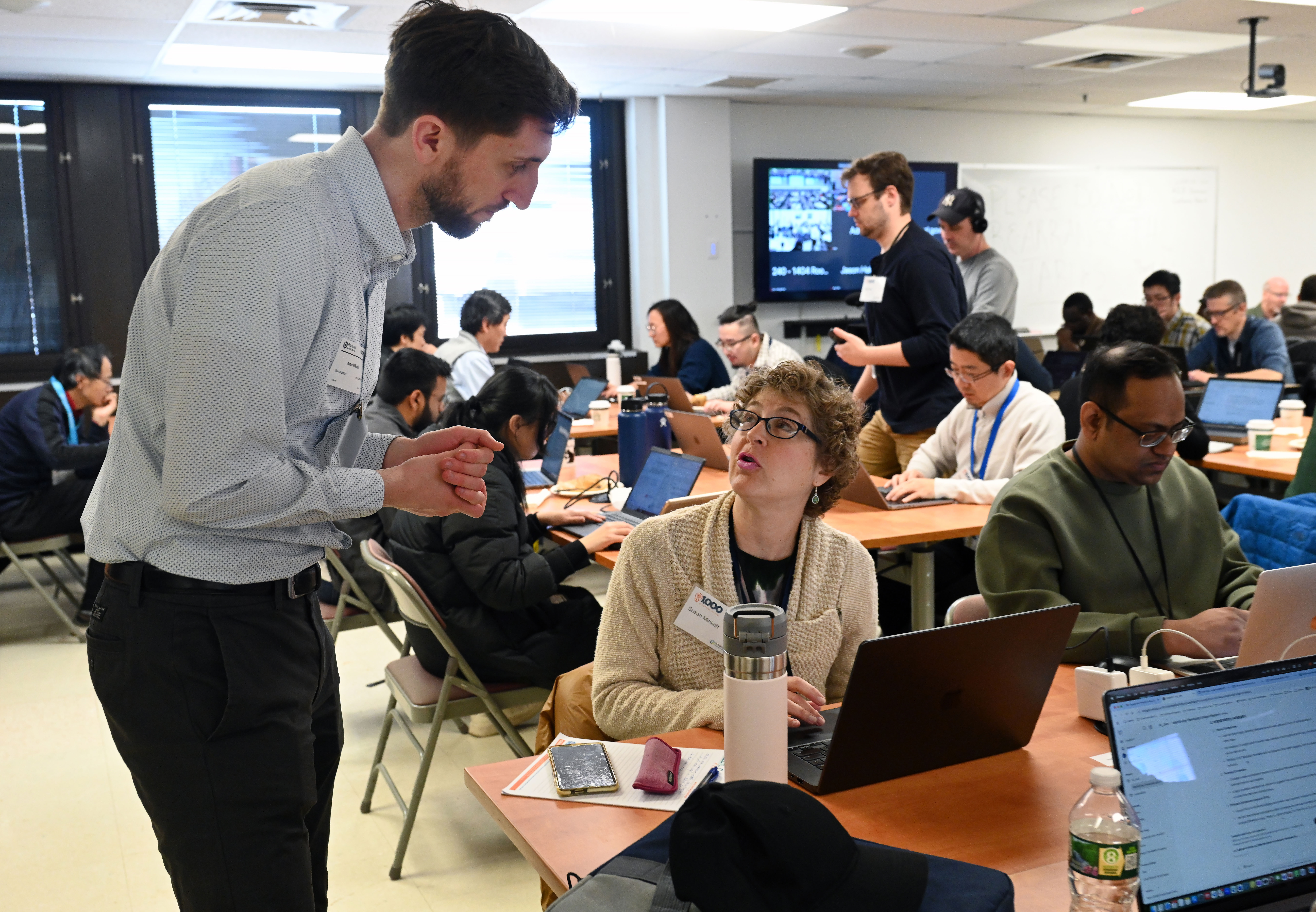

Credit: Kevin Coughlin/Brookhaven National Laboratory

Caption: Aaron Wilkowitz (left), from OpenAI, was on hand to assist users as they applied the "o" series advanced reasoning AI models to their selected problems. Here, Wilkowitz answered some questions from CDS Applied Mathematics Department Chair Susan (Sue) Minkoff (center).

Credit: Kevin Coughlin/Brookhaven National Laboratory

Caption: The 1,000 Scientist AI Jam Session offered an opportunity to educate the community about what artificial intelligence can do to advance science and society. Francis Martin (right), from U.S. Representative Nick LaLota's (NY-01) office, stopped in to check out the AI Jam and toured Brookhaven Lab's Scientific Computing and Data Facilities, accompanied by Brookhaven Lab Director JoAnne Hewett (center), CDS Associate Laboratory Director Nicholas D'Imperio (left), and Deputy Director for Science and Technology and John Hill (not pictured).